Sparsity with multiple types of features

In this project we study regularization strategies that can handle different types of predictors (e.g., factor, continuous, nominal, ordinal, spatial). We focus on a Lasso-type penalty that enters the objective function as a sum of subpenalties, one for each type of predictor. This allows predictor selection and the fusion of levels within a predictor to happen in a data-driven way, simultaneously with parameter estimation.
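The sketch below shows how such a penalty decomposes into a sum of subpenalties. It rests on several illustrative assumptions. A standard Lasso handles selection, a fused Lasso on adjacent-level differences handles an ordinal predictor, and a generalized fused Lasso on all pairwise level differences handles a nominal predictor. A single tuning parameter `lam` is shared by all subpenalties. The function names are hypothetical and are not the smurf package's API.

```python
import numpy as np

def lasso(beta, lam):
    # Lasso: shrinks individual coefficients towards zero (predictor selection)
    return lam * np.sum(np.abs(beta))

def fused_lasso(beta, lam):
    # Ordinal predictor: penalize differences between adjacent levels,
    # so neighbouring levels can be fused into one estimate
    return lam * np.sum(np.abs(np.diff(beta)))

def generalized_fused_lasso(beta, lam):
    # Nominal predictor: penalize differences between all pairs of levels
    # (upper triangle only, so each pair is counted once)
    diffs = beta[:, None] - beta[None, :]
    return lam * np.sum(np.abs(np.triu(diffs, k=1)))

def multi_type_penalty(blocks, lam):
    # blocks: list of (coefficient vector, subpenalty function) pairs, one
    # per predictor; the total penalty is the sum of the subpenalties
    return sum(pen(beta, lam) for beta, pen in blocks)

# Hypothetical coefficients for three predictors of different types
blocks = [
    (np.array([0.5, -1.0]), lasso),
    (np.array([0.0, 0.2, 0.2, 0.7]), fused_lasso),
    (np.array([1.0, 1.0, -0.5]), generalized_fused_lasso),
]
total = multi_type_penalty(blocks, lam=0.1)
print(round(total, 4))  # → 0.52
```

A zero difference between two levels contributes nothing to the fused terms. This is why the penalty can push levels to share exactly the same coefficient.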

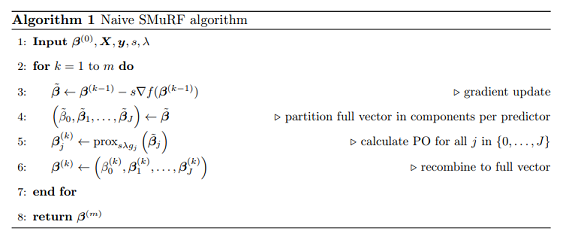

We develop a new estimation strategy for convex predictive models with this multi-type penalty. Building on the theory of proximal operators, our estimation procedure is computationally efficient: it partitions the overall optimization problem into easier-to-solve subproblems, each specific to one predictor type and its associated penalty. Earlier research relies on approximations of the non-differentiable penalties to solve the optimization problem. The proposed SMuRF algorithm removes the need for such approximations and achieves higher accuracy and computational efficiency. This is demonstrated with an extensive simulation study and a case study on insurance pricing analytics.
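Below is a minimal sketch of why proximal operators split the problem into per-predictor subproblems. It is not the SMuRF implementation. It swaps in plain proximal gradient descent with a fixed step, a Gaussian least-squares loss, and only Lasso and Group Lasso subpenalties, because both have closed-form proximal operators. Fused penalties would need their own inner solver, which is not shown. The function names, the block layout and the simulated data are all hypothetical.

```python
import numpy as np

def soft_threshold(z, t):
    # Proximal operator of t * ||z||_1 (Lasso subpenalty), applied elementwise
    return np.sign(z) * np.maximum(np.abs(z) - t, 0.0)

def group_soft_threshold(z, t):
    # Proximal operator of t * ||z||_2 (Group Lasso subpenalty):
    # shrinks the whole block and sets it exactly to zero if its norm <= t
    norm = np.linalg.norm(z)
    if norm <= t:
        return np.zeros_like(z)
    return (1.0 - t / norm) * z

def proximal_gradient(X, y, blocks, lam, n_iter=500):
    """Minimize (1/(2n)) * ||y - X beta||^2 + lam * sum of block subpenalties.

    blocks: list of (column indices, prox function) pairs; every column of X
    is assumed to belong to exactly one block.
    """
    n, p = X.shape
    beta = np.zeros(p)
    # Fixed step 1/L, where L = sigma_max(X)^2 / n is the Lipschitz
    # constant of the gradient of the least-squares loss
    step = n / np.linalg.norm(X, 2) ** 2
    for _ in range(n_iter):
        # Gradient step on the smooth loss
        z = beta - step * (X.T @ (X @ beta - y) / n)
        # The penalty is separable over blocks, so its proximal operator
        # splits into one small, independent subproblem per block
        for idx, prox in blocks:
            beta[idx] = prox(z[idx], step * lam)
    return beta

# Usage on simulated data (hypothetical example, not from the case study)
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 7))
beta_true = np.array([2.0, 0.0, 0.0, 1.5, -1.5, 0.0, 0.0])
y = X @ beta_true + 0.1 * rng.normal(size=200)
blocks = [
    ([0, 1, 2], soft_threshold),     # three individually selected predictors
    ([3, 4], group_soft_threshold),  # one two-column predictor (active)
    ([5, 6], group_soft_threshold),  # one two-column predictor (inactive)
]
beta_hat = proximal_gradient(X, y, blocks, lam=0.1)
print(np.round(beta_hat, 2))
```

The gradient step is shared by all predictors, while each block's proximal step only sees its own coefficients. Adding a new predictor type therefore only requires its own proximal operator.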

This is joint work with Sander Devriendt, Tom Reynkens and Roel Verbelen.

The R package smurf is available on CRAN, and its source code is available on GitLab.